Chatbots, Robots, and Artificial Agents…Oh My!

An exploration into the world of CUIs

CUIs are conversational user interfaces that emulate human-to-human conversations, but with a computer. To hold a human-like conversation, the system uses natural language processing (NLP) to understand, analyze, and build meaning behind what a user is trying to say. We dove into three studies in an attempt to grasp the complexities and potential of CUIs.

Study 1: Build a Bot

We started our research by building our own chatbot. We interviewed each other to find a “need” for our bot, developed a personality, and wrote sample scripts before we even started to “code” the bot. We imagined our bot to be thoughtful, insightful, helpful, friendly, warm-hearted, relatable, observant, reflective, funny, and brief. We even wrote a short job description for our bot, who we later named Mookie.

“Everybody goes about their day experiencing a range of emotions. Sometimes we’re nervous, we doubt ourselves and overthink. We may thrive in one environment, like as a leader at work, while falling short in another environment, like when we have to present a project at school. Somewhere in the mess that we call life, sometimes we just need someone to check in on us, see how we are doing. Whether it be to motivate us or calm us down, this person will be focused on us and our well-being. It’s part therapist, part best friend, part hype-man.”

Our conversational flow diagram:

Finally, we translated Mookie’s personality and sample conversation into Dialogflow and Mookie began to come to life. You can interact with Mookie here. I also wrote more about this experience on This + That.

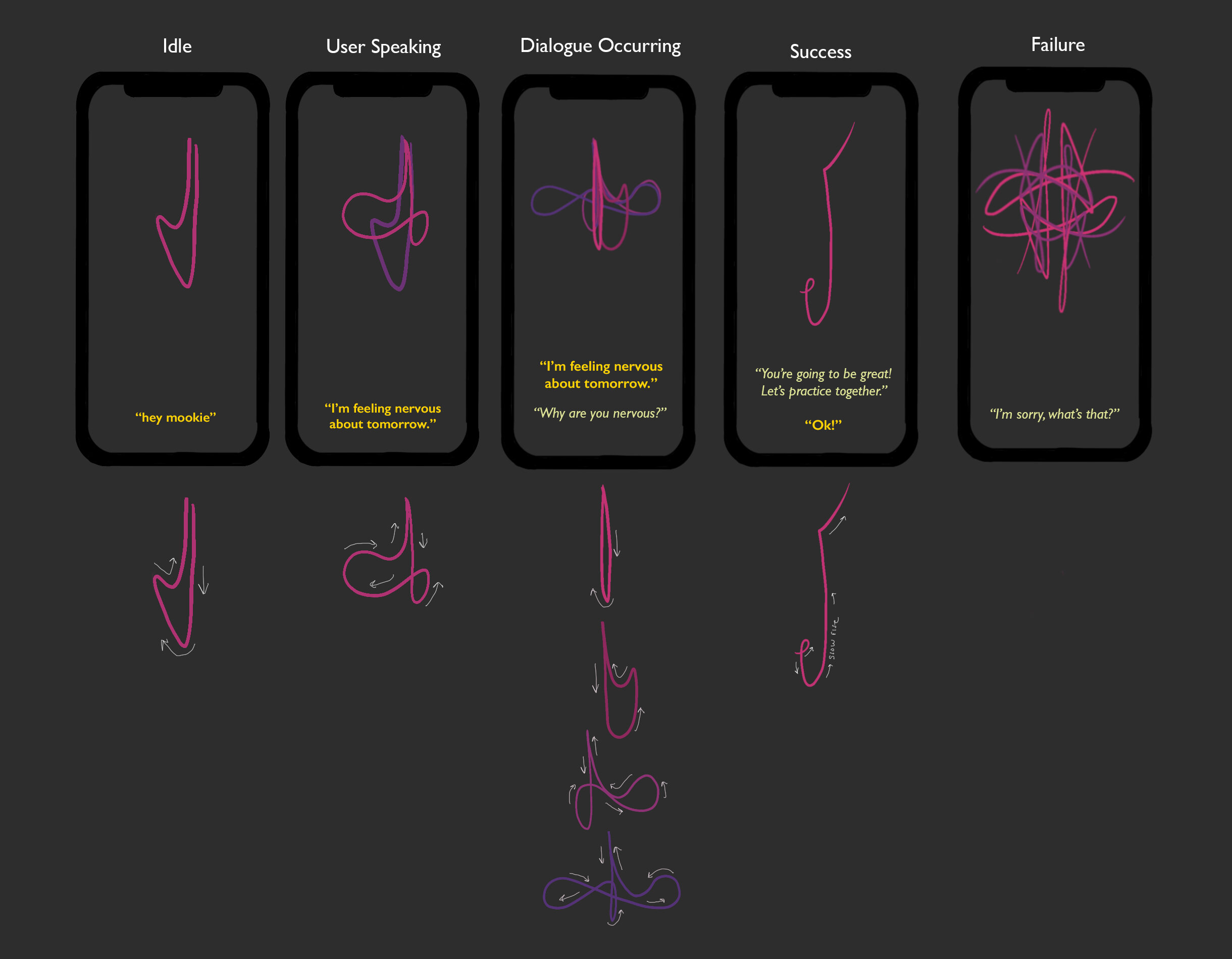

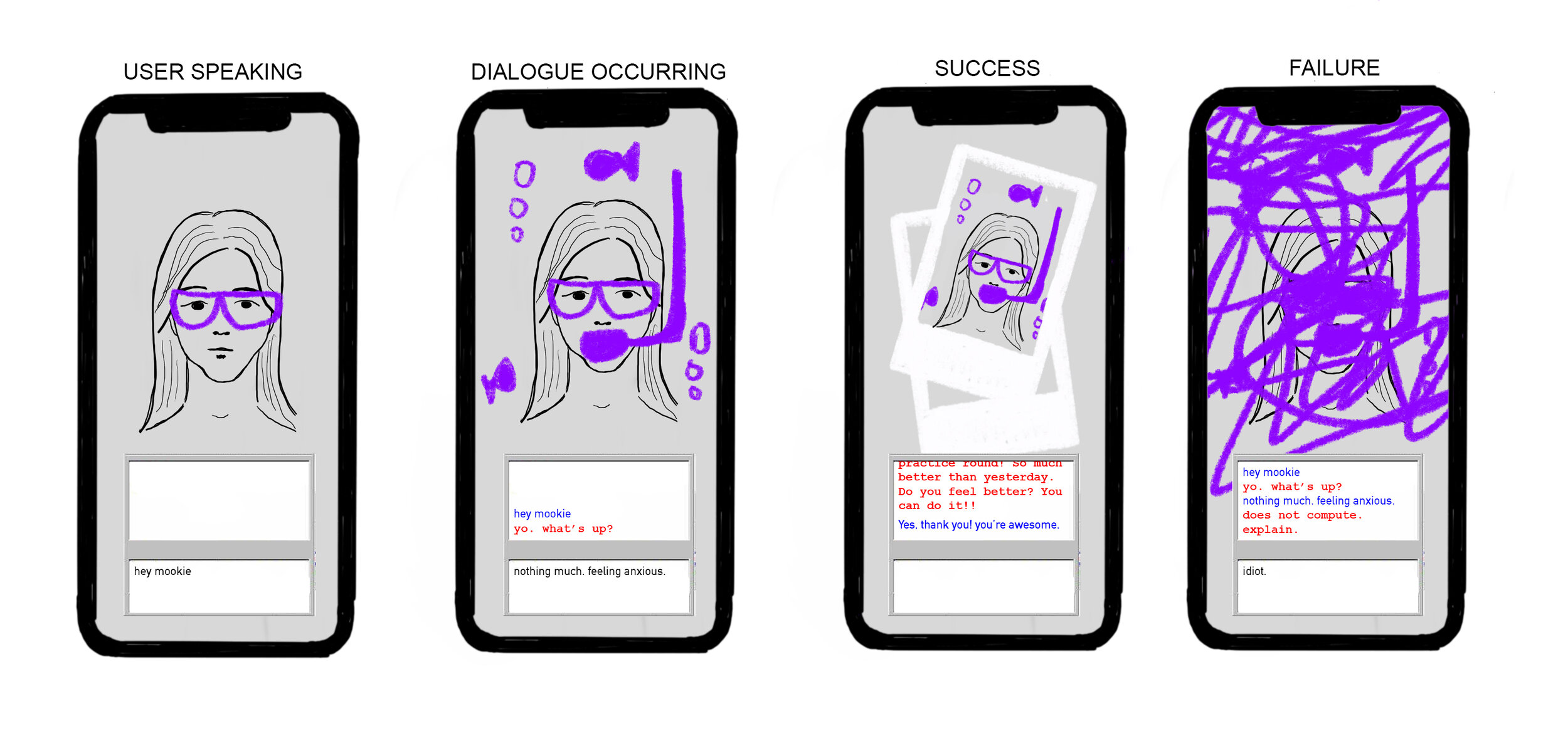

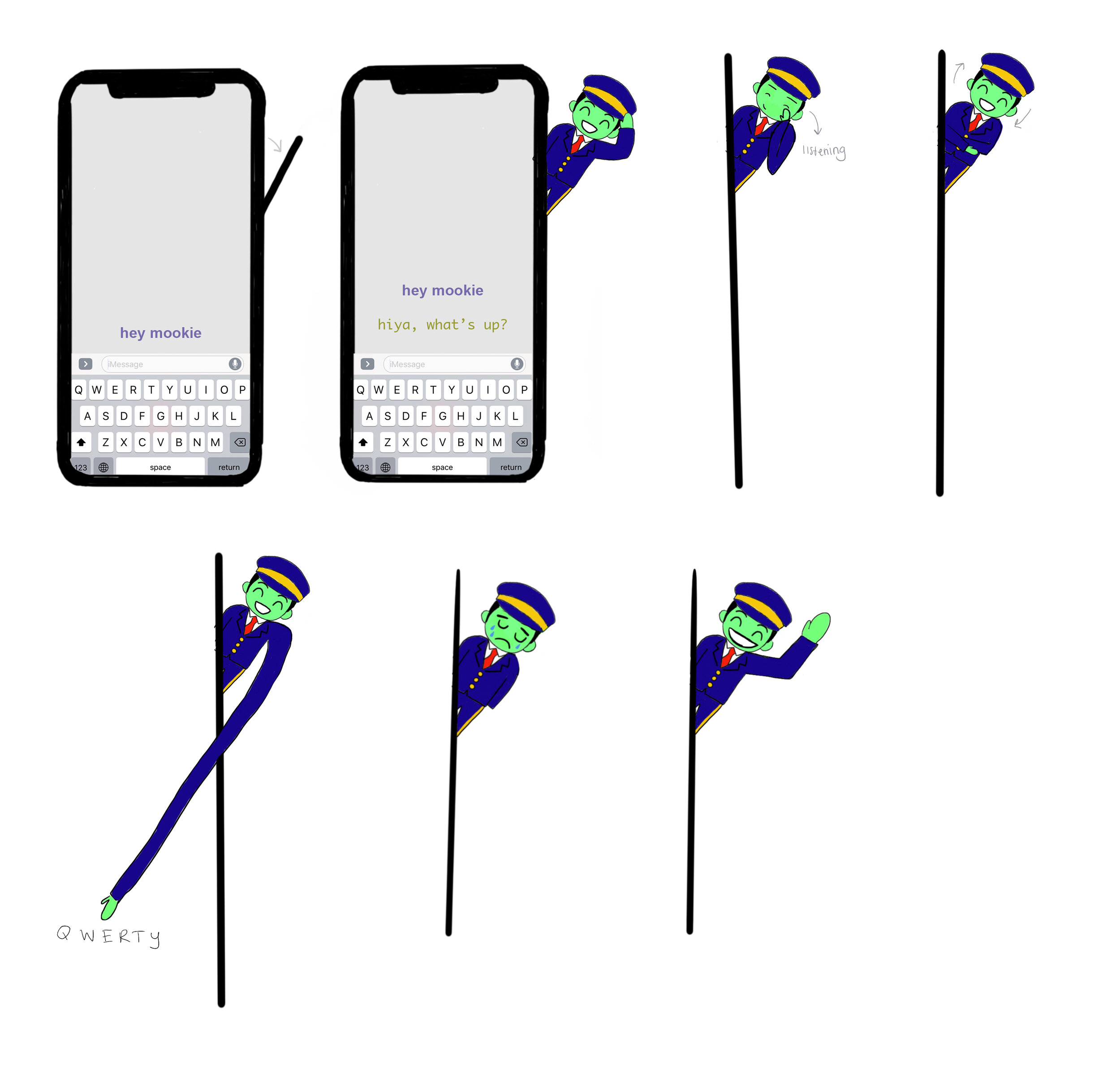

Study 2: Push Your Visual Range

Now that we had begun to understand the linguistic basics behind CUIs, we switched gears to the visual side of these interfaces. How might the CUI express itself visually? The focus of this study was to visually depict four states of the CUI: when a user is speaking, when a dialogue is occurring, when the CUI is successful, and when it fails. During my initial research, I found inspiration in musical conductors, station agents, movies, and nature.

Ultimately, I came up with three different explorations of a visual narrative.

Study 3: A Critical Look

The final study required us to think critically about our relationship to conversational interfaces. The visuals created in this study would directly attempt to explore and answer my primary research question:

How might the design of a CUI infiltrate a user’s personal conversational space?

I decided to have my CUI live on an Apple Watch (or comparable wearable) because the user wouldn’t be required to physically interact with it (touch a screen, open an application, etc.). I imagined a more seamless interaction, one that replicated human-to-human interaction so the user would almost forget they were corresponding with a bot. I initially explored more ambiguous shapes for the CUI states, but my abstract forms were not translating as well as I had hoped. Also, the more successful visuals from Study 2 were conceived for a mobile device, so they didn’t translate well to the smaller watch frame. I kept finding that these early visuals did not add anything to my research question, and I needed to go back to the drawing board.

I kept notes on my day and interviewed others in hopes of getting a better idea of a user’s journey when they are a) alone and b) likely to speak out loud to themselves. I still did not want to create a task-oriented bot, so it was important that the bot be activated even without an introduction. While conducting my research, I kept thinking of family members and friends with dogs and how people in general talk to their dogs. Dogs communicate well through body language, are friendly, are recognizable even in a more abstract or animated form, and convey a level of happiness upon interaction.